Chapter 6 is devoted to what the author calls The Risk Landscape. In it he makes a first attempt at pulling together the basic information about existential risks from the previous chapters, moving on to a global quantification of those risks and a suggested framework for acting on them.

This is, up to now, the most strongly EA-coded chapter in the book, and it goes down the typical EA rabbit hole of effective allocation of resources (in this case, in the service of avoiding catastrophes) and the importance-tractability-neglectedness heuristic for prioritizing and comparing risks.

Quantification

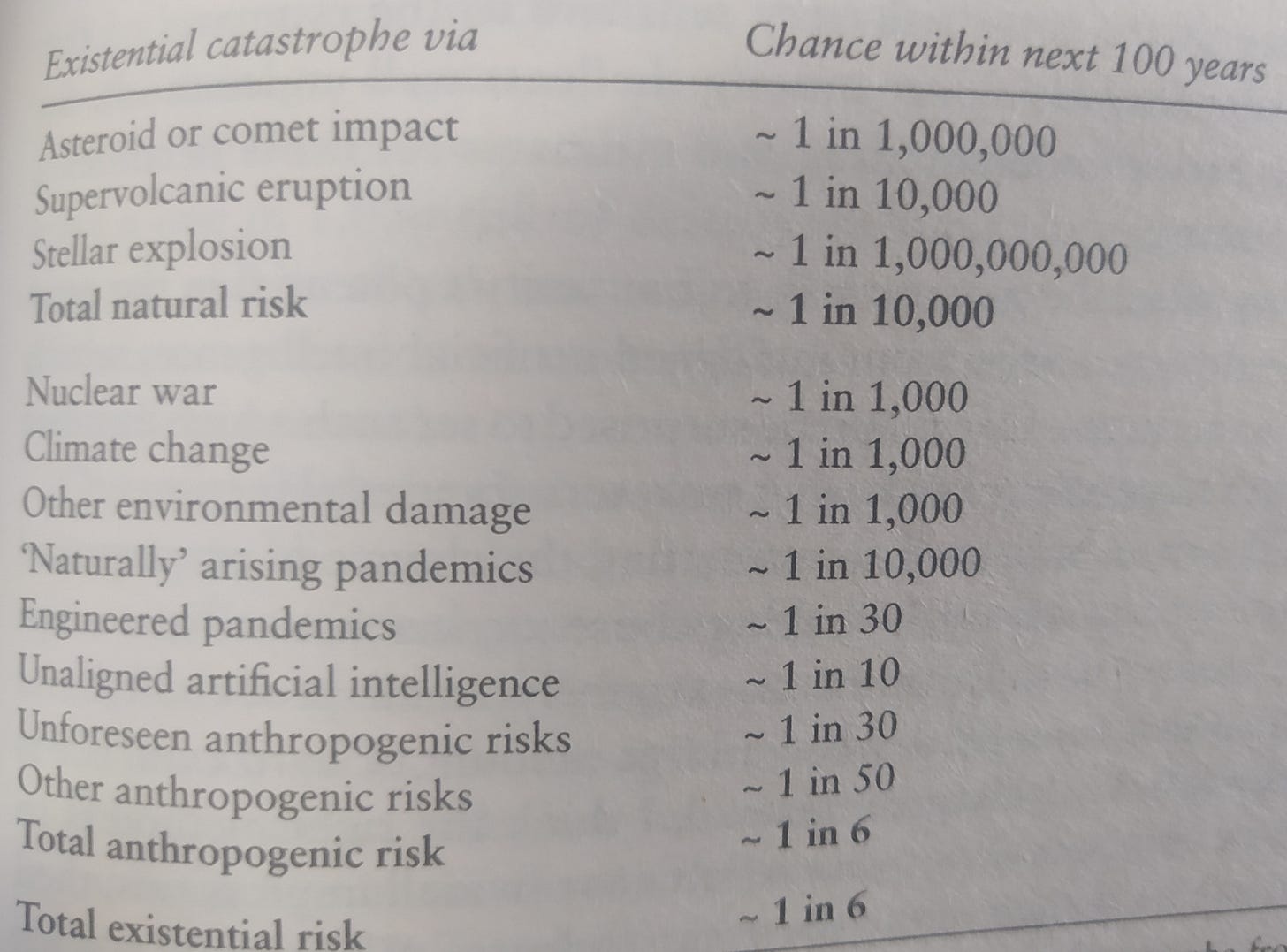

-We start with a defense of the use of numbers and percentages, instead of vague descriptors, to avoid language ambiguity, and with the underlying EA spirit of trying to quantify everything. This includes an approximate calculation of probabilities for different X-risks, summarized in the following table:

-The total matches what was stated at the beginning of the book (Russian roulette: a 1 in 6 chance over the next century).

-Ord is aware of how numbers (even more so, Bayesian speculations) ‘mystify’ and have the potential to mislead, but he still makes the case that they clarify. The worry really resonates with my own background, where I am used to people bullshitting their pet beliefs through esoteric language (that’s most of the gig in the Humanities). He also starts with a high probability for new, unknown risks (like AI), which some would dispute.

-Next we get a short take on how to combine the individual risks, which leads to the notion of total existential risk and some counterintuitive results when adding it up: the risks might overlap, so the total is not a simple sum, and it would perhaps be lower still if the risks are correlated.
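To make the point about adding up risks concrete, here is a minimal sketch using standard probability rules rather than anything taken from the book. The probabilities in `example_risks` are hypothetical placeholders, not Ord's estimates, and the function names are made up for illustration. Under independence, the total is one minus the chance of surviving every risk. Whatever the correlation, the total lies between the largest single risk and the plain sum.

```python
from math import prod


def total_risk_independent(probs):
    """Chance that at least one catastrophe occurs, assuming the risks are independent."""
    return 1 - prod(1 - p for p in probs)


def total_risk_bounds(probs):
    """Bounds on total risk that hold regardless of how the risks are correlated."""
    lower = max(probs)             # risks overlap completely (perfectly correlated)
    upper = min(1.0, sum(probs))   # risks never coincide (mutually exclusive)
    return lower, upper


# Hypothetical per-century probabilities, for illustration only (NOT Ord's figures).
example_risks = [0.10, 0.03, 0.001]

naive_sum = sum(example_risks)
independent = total_risk_independent(example_risks)
lower, upper = total_risk_bounds(example_risks)

print(f"naive sum:          {naive_sum:.4f}")
print(f"independent total:  {independent:.4f}")
print(f"bounds on total:    {lower:.4f} to {upper:.4f}")
```

With these made-up inputs the independent total comes out slightly below the naive sum, and positively correlated risks would push it further down toward the largest single risk.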

Risk factors

-Besides the risks themselves, it might pay to think about risk factors and consider what they tell us with respect to alternative ways of choosing what risk(s) to work on.

-Some things (like war between great powers, the undermining of civic institutions, economic crises…) aren’t X-risks per se, but they can raise them considerably. This is in fact the definition of risk factors: those actions or events that increase X-risks.

-This provides a different strategy for dealing with risks: instead of treating each of them as a discrete box, you can focus on factors that cut horizontally across them. You also have the opposite: factors that reduce risk(s).

-Some X-risks themselves constitute factors with respect to other risks.

Which risks to focus on

-One should try to manage risks like an investment portfolio, choosing a diversified mix of options that together minimize the total risk.

-The classic EA way to do this is to consider cost-effectiveness (how much bang you get for your buck), which follows a formula: Effectiveness = Importance × Tractability × Neglectedness

-Other criteria we might consider are how soon, sudden and sharp those risks are (i.e., arriving in a short amount of time, taking off quickly, and striking without any warning signals). Another distinction is between targeted interventions (which Ord thinks are the better choice right now) and broad ones.
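The Importance × Tractability × Neglectedness formula mentioned above can be sketched as a simple ranking exercise. This is only an illustration of the heuristic, not a method from the book: the `Cause` class, the risk names, and every score are hypothetical, and the scores are on an arbitrary scale whose only purpose is to allow comparison.

```python
from dataclasses import dataclass


@dataclass
class Cause:
    name: str
    importance: float     # how much is at stake (arbitrary illustrative scale)
    tractability: float   # how much progress extra effort would buy
    neglectedness: float  # how little attention the cause currently gets

    def effectiveness(self) -> float:
        """Importance x Tractability x Neglectedness, as in the heuristic."""
        return self.importance * self.tractability * self.neglectedness


# Hypothetical causes and scores, invented purely for illustration.
causes = [
    Cause("risk A", importance=8, tractability=2, neglectedness=5),
    Cause("risk B", importance=10, tractability=1, neglectedness=3),
    Cause("risk C", importance=4, tractability=5, neglectedness=6),
]

# Highest estimated effectiveness first.
ranked = sorted(causes, key=Cause.effectiveness, reverse=True)

for cause in ranked:
    print(f"{cause.name}: {cause.effectiveness():.0f}")
```

Because the three factors multiply, a cause with the highest importance (here the made-up "risk B") can still rank last if it is hard to make progress on or already well covered.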